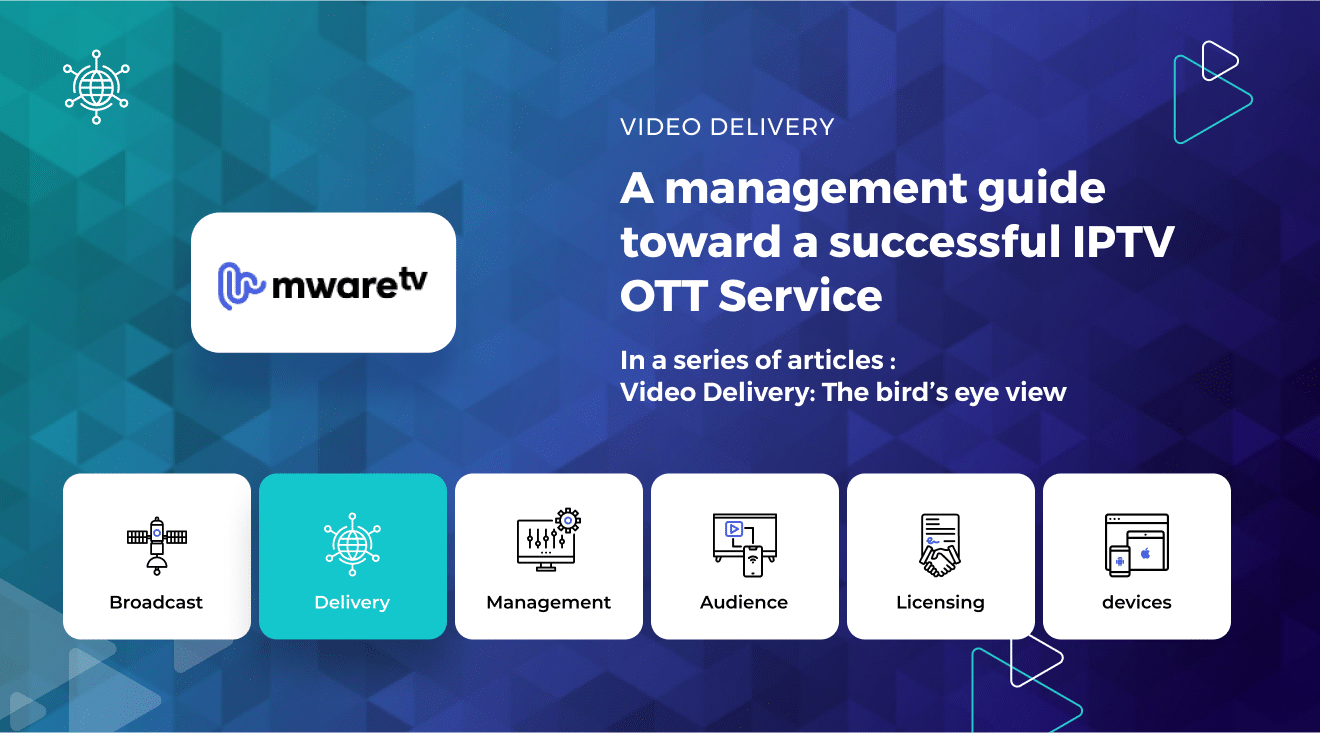

A management guide towards a successful IPTV OTT Service: Video Delivery: the bird’s eye view

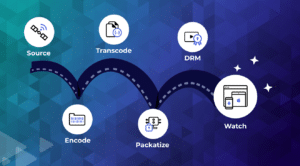

What Does the Video Pipeline Look Like?

Here’s a very quick overview of the video pipeline; in later sections of this article, we’ll go deeper into each stage. The assumption here is that we are looking at an ABR-based video delivery service.

- The input to the Transcoder is an IP stream (for Live) or a standalone file (for VOD), and it is configured to produce the appropriate bitrates and resolutions for the project under consideration. For example, if the content provider is streaming to Tier-2 and Tier-3 cities with low bandwidth conditions, then it is important to produce bitrate and resolution combinations (profiles) that can stream without buffering. The first article of the series describes transcoding in more detail.

- The output of the Transcoder is sent to a Packager that generates individual segments for Adaptive Bit Rate (ABR)-based delivery. It additionally creates a Manifest file that contains the index and metadata of the generated segments and dictates the order of delivery.

- Additionally, if one wants to protect the content, technologies like DRM and Digital Watermarking are used to prevent piracy and to identify offending streamers. This is generally activated in the packager.

- The segments and associated manifest are stored on an Origin/HTTP server.

- The Origin/HTTP server is not designed for distribution to large numbers of end-users at many locations all over the world. For this purpose, a Content Delivery Network (CDN) is used (the different types of CDN are covered in this article). The Origin server feeds the streams into the CDN, which in turn distributes the content to many PoPs (points of presence) close to the region where the end-users are, where it is cached for fast access and to keep load off the origin. A professional CDN such as Akamai makes the distribution more reliable, easier to maintain, and highly scalable. The player uses the URL of the manifest file to start playback.

This is, in a nutshell, the basis of most video streaming services today. Of course, there are additional services around this, such as ad insertion, subscription and payment services, recommendation engines, and QoE & QoS analytics, that we shall look at in follow-up articles.

For now, let’s start with some fundamentals.

ABR Streaming Basics

The first thing that we shall look at is the concept of ABR video streaming. So, what is ABR and how does it work?

ABR streaming is a technology and a method of video streaming in which the video players are empowered to dynamically vary the quality and bitrate of the streaming video depending on the bandwidth and buffer levels of the player.

As we learned in the previous article, a video is typically transcoded into multiple bitrate and resolution combinations called profiles.

Each of these “copies” or variants of the video stream is split into chunks of equal duration called video segments, and the order and duration of each chunk are recorded in a file called a playlist or a manifest.

The chunks are stored on a server and the manifest is provided to the player. Before requesting each segment, the player checks its buffer level and bandwidth conditions, determines which variant of the stream it can sustain, and uses the manifest to download the next segment from that variant.

By adaptively varying the quality and bitrate of the video being streamed, the player can successfully adapt to varying bandwidth conditions and prevent annoying stalls and buffering events.
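To make the adaptation step concrete, here is a minimal Python sketch of how a player might choose a variant before each segment download. The ladder, the 0.8 safety factor, and the 5-second low-buffer threshold are illustrative assumptions, not values taken from any particular player; real players use more elaborate heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    bitrate_kbps: int


# Hypothetical bitrate ladder, sorted from lowest to highest bitrate.
LADDER = [
    Profile("240p", 400),
    Profile("360p", 800),
    Profile("720p", 2500),
    Profile("1080p", 5000),
]


def choose_profile(throughput_kbps, buffer_s, ladder=LADDER,
                   safety=0.8, low_buffer_s=5.0):
    """Pick the variant to download next.

    - If the buffer is nearly empty, fall back to the lowest profile
      to avoid a stall.
    - Otherwise pick the highest profile whose bitrate fits within a
      safety margin of the measured throughput.
    """
    if buffer_s < low_buffer_s:
        return ladder[0]
    budget = throughput_kbps * safety
    candidates = [p for p in ladder if p.bitrate_kbps <= budget]
    return candidates[-1] if candidates else ladder[0]


if __name__ == "__main__":
    # (measured throughput in kbps, buffer level in seconds)
    for throughput, buffer_level in [(7000, 20), (4000, 20), (10000, 2), (300, 20)]:
        chosen = choose_profile(throughput, buffer_level)
        print(throughput, buffer_level, "->", chosen.name)
```

The safety factor is there because throughput measurements are noisy; picking a profile right at the measured bandwidth tends to drain the buffer.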

Now that we’ve discussed how ABR streaming works in principle, let’s take a look at how it is implemented in real-life scenarios. This brings us to the twin-topics of HLS (HTTP Live Streaming) and MPEG-DASH.

HLS and MPEG-DASH

HLS and MPEG-DASH are HTTP-based ABR streaming protocols that work on the principles we discussed in the previous sections. They function in a similar fashion and only the specifics of their design are different. Let’s look at both these protocols now.

HLS is a chunk-based, HTTP-based ABR streaming protocol developed by Apple to stream videos to their Apple ecosystem. It can be used for both Live and VOD streaming and is a very popular protocol that supports a lot of features such as ad insertion, DRM (via Apple FairPlay Streaming), low-latency streaming via LL-HLS, and more!
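As a rough illustration of what HLS manifests look like, the Python sketch below builds a master playlist (which lists the variants) and a VOD media playlist (which lists the segments of one variant) using standard HLS tags. The file names, bitrates, resolutions, and the 6-second segment duration are made-up values for this example.

```python
import math


def master_playlist(variants):
    """Build a master playlist.

    variants: list of (bandwidth_bps, "WIDTHxHEIGHT", uri) tuples.
    """
    lines = ["#EXTM3U"]
    for bandwidth_bps, resolution, uri in variants:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth_bps},RESOLUTION={resolution}"
        )
        lines.append(uri)
    return "\n".join(lines) + "\n"


def vod_media_playlist(segment_uris, segment_duration_s):
    """Build a VOD media playlist for one variant."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        # The target duration is an integer upper bound on segment length.
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_duration_s)}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{segment_duration_s:.3f},")
        lines.append(uri)
    # ENDLIST tells the player that no more segments will be added.
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(master_playlist([
        (800_000, "640x360", "360p/index.m3u8"),
        (2_500_000, "1280x720", "720p/index.m3u8"),
    ]))
    print(vod_media_playlist(["seg0.ts", "seg1.ts", "seg2.ts"], 6.0))
```

The player first fetches the master playlist, picks a variant, and then fetches that variant's media playlist to find the segment URLs.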

MPEG-DASH: In a bid to standardize ABR streaming, MPEG issued a Call for Proposals (CFP) in 2009 for an HTTP-based streaming standard. This soon led to the creation of the MPEG-DASH standard, which was published in April 2012. It has been revised since, including in 2019 as ISO/IEC 23009-1:2019. Similar to HLS, MPEG-DASH also supports ad insertion, subtitles, DRM, low-latency streaming via LL-DASH, and more!

While there are other streaming protocols such as HDS and MSS, HLS and MPEG-DASH are the predominant ones and reach the largest number of devices in the streaming ecosystem.

Live vs. VOD Video Streaming

As we know, both HLS and DASH can be used for both Live and VOD streaming. But do they work the same way? Is there any difference?

Yes and no!

While the fundamentals of ABR streaming remain the same for Live and VOD when you use HLS or DASH, there is a difference when it comes to Live.

In VOD, you have the assets ahead of time, and can compress, package, and deliver them after the entire library has been processed. With Live streaming, however, the video arrives continuously and has to be processed and delivered within a short period of time for the stream to stay live. This implies that the architecture and system configuration for live streaming need to be more robust and better provisioned (considering traffic spikes) than for VOD streaming.
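One place where the Live/VOD difference shows up is the manifest itself. The sketch below, written under the assumption of an HLS live stream with a sliding window, keeps only the most recent segments in the playlist and never writes an end marker, because new segments keep arriving. The class name, window size, and segment naming are hypothetical.

```python
from collections import deque


class LiveWindow:
    """Toy model of a sliding-window live playlist."""

    def __init__(self, window_size=3, segment_duration_s=6):
        # deque(maxlen=...) silently drops the oldest segment when full.
        self.segments = deque(maxlen=window_size)
        self.next_index = 0
        self.segment_duration_s = segment_duration_s

    def add_segment(self):
        """Called each time the packager finishes a new segment."""
        self.segments.append(self.next_index)
        self.next_index += 1

    def playlist(self):
        first = self.segments[0] if self.segments else 0
        lines = [
            "#EXTM3U",
            "#EXT-X-VERSION:3",
            f"#EXT-X-TARGETDURATION:{self.segment_duration_s}",
            # Sequence number of the first segment still in the window.
            f"#EXT-X-MEDIA-SEQUENCE:{first}",
        ]
        for index in self.segments:
            lines.append(f"#EXTINF:{self.segment_duration_s:.3f},")
            lines.append(f"segment_{index}.ts")
        # No #EXT-X-ENDLIST: the player must keep re-fetching the playlist.
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    window = LiveWindow()
    for _ in range(5):
        window.add_segment()
    print(window.playlist())
```

Because the player has to re-fetch this playlist every few seconds, any delay in transcoding, packaging, or delivery is immediately visible to viewers, which is why live pipelines need the extra provisioning.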

So why are HLS and DASH so popular?

One of the reasons these protocols are popular is their use of video segments and the HTTP protocol. This allows a reasonably powerful computer to be set up as a streaming server, and with the use of a CDN, videos can be reliably delivered to the end-user.

This brings us to the next topic – CDNs or Content Delivery Networks. Let’s understand how these are used effectively to improve the quality of video streaming.

Content Delivery Networks

CDNs or Content Delivery Networks are made up of strategically located servers in a particular network, region, or worldwide that cache or store copies of your video chunks/segments and serve them to viewers. The use of a CDN is very important in video streaming because it improves streaming performance by caching files closer to users. This means that the players do not have to go all the way to the origin web server to get the segments, so the video buffers less often and the server does not crash under high loads. It furthermore limits the traffic in the backbone of the network.

What are the advantages of using a CDN?

- Traffic spikes are absorbed by the CDN, which serves most requests from its caches and forwards only cache misses to the origin server. Thus, with a relatively small infrastructure and a CDN, you can effectively serve a large audience.

- CDNs can help protect your service from being taken down by DDoS attacks.

- Since CDNs are often geographically distributed, they tend to be closer to your end-users than your origin servers. Thus, using a CDN results in lower latency and faster startup times.
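The first advantage, origin offload, can be illustrated with a small simulation. This is only a toy model of a single edge cache with least-recently-used eviction; real CDNs have many tiers and more sophisticated policies, and the class and function names here are invented for the example.

```python
from collections import OrderedDict


class EdgeCache:
    """Toy LRU cache standing in for one CDN edge server."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, fetch_from_origin):
        if key in self.store:
            self.store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.store[key]
        # Cache miss: only now does a request reach the origin.
        self.misses += 1
        value = fetch_from_origin(key)
        self.store[key] = value
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict least recently used
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


if __name__ == "__main__":
    origin_requests = []

    def origin(key):
        origin_requests.append(key)
        return f"bytes of {key}"

    cache = EdgeCache(capacity=100)
    # 1,000 viewers each request the same 10 segments.
    for _viewer in range(1000):
        for seg in range(10):
            cache.get(f"seg{seg}.ts", origin)

    print("requests served:", cache.hits + cache.misses)
    print("requests that reached the origin:", len(origin_requests))
    print("cache hit ratio:", cache.hit_ratio())
```

In this idealized case the origin sees each segment requested once, regardless of how many viewers are watching.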

What kind of CDNs are there?

Well, you can generally classify CDNs into three categories:

- Public CDNs: Companies like Akamai and Amazon (with CloudFront) offer CDNs as a service, or as a Platform as a Service. They have a large network of edge caches and PoPs around the world and offer a lot in terms of features and service.

- Private CDNs: here, you build your own CDN with your own data centers and a network of PoPs. This can be a good strategy if you have massive traffic that is not economical to serve with Public CDNs. It is also a good option if none of the public CDN providers service your geographical area of interest. However, you need to have a team to set up, operate, and maintain the infrastructure.

- Hybrid CDNs: here you maintain a mix of Public and Private CDNs and use them as appropriate based on the need (traffic, geo, scalability, etc.).

Digital Rights Management

Digital Rights Management (DRM) is a technology that is used to protect your streaming content from piracy, and it provides the flexibility to choose how your content is consumed via licensing & business rules. You can set rules to:

- block people from certain countries, ISPs, etc.,

- prevent a user from screen capturing,

- block free users from accessing premium content,

- block playback on specific devices,

- establish rental periods for content (i.e., a time limit within which a person has to watch a movie),

- and much more!

Commercially, there are many trusted DRM technologies such as Microsoft’s PlayReady, Google’s Widevine, and Apple’s FairPlay. And there are DRM vendors who provide additional infrastructure around these DRM solutions by adding more business rules, analytics, and tools for publishers.

DRM technologies basically work as follows:

- Every video stream is encrypted using AES-128 and the encryption keys are stored safely on a DRM server.

- When the player wants to play a protected video, it contacts the DRM server for the decryption keys.

- After the DRM server authenticates the request, it provides the keys to the player.

- The keys are used by a protected software component in the player, the Content Decryption Module (CDM), to decrypt the video so that it can be decoded and delivered to the screen.
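The key-request steps above can be sketched as a toy model. To be clear, this is not how PlayReady, Widevine, or FairPlay are implemented: real systems wrap keys in device-bound licenses and never expose them to application code. The class, the token-based entitlement check, and all names below are hypothetical, and no actual encryption is performed.

```python
import secrets


class ToyLicenseServer:
    """Hypothetical stand-in for a DRM license server."""

    def __init__(self):
        self._keys = {}             # content_id -> 16-byte (128-bit) key
        self._entitlements = set()  # (user_token, content_id) pairs

    def register_content(self, content_id):
        # Step 1: a key is generated per content item and stored safely.
        self._keys[content_id] = secrets.token_bytes(16)

    def grant(self, user_token, content_id):
        # Business rule: this user is allowed to play this content.
        self._entitlements.add((user_token, content_id))

    def request_license(self, user_token, content_id):
        # Steps 2 and 3: the player asks for the key, the server checks
        # the request and only then hands the key over.
        if (user_token, content_id) not in self._entitlements:
            raise PermissionError("user is not entitled to this content")
        return self._keys[content_id]


if __name__ == "__main__":
    server = ToyLicenseServer()
    server.register_content("movie-1")
    server.grant("alice-token", "movie-1")

    key = server.request_license("alice-token", "movie-1")
    print("alice received a key of", len(key) * 8, "bits")

    try:
        server.request_license("mallory-token", "movie-1")
    except PermissionError as err:
        print("mallory was refused:", err)
```

The business rules listed earlier (geo-blocking, rental periods, device restrictions) would all live in the entitlement check before the key is released.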

DRM solutions are meant to prevent streams from being stolen. However, if your streams are stolen, then how do you identify who leaked them? This is where Forensic Watermarking comes into the picture. Using this technology, it is possible to identify which one of your streaming subscribers captured and leaked the video, because every streaming session carries its own unique watermark.

CatchupTV

Several streaming service providers also have the capability to record a live transmission and provide it back to the users in the form of a VOD asset. This is a very popular feature and is used to allow users to watch sporting events, concerts, news, and live shows after they have taken place. This is referred to as CatchupTV and is a fantastic option to increase engagement, repeat viewership, and virality.

While this might appear easy to provide, there are complexities that a streaming service must consider. For instance, does a streaming service re-encode the captured video to make it more economical to stream as a catchup video? Or, if there are ads inserted into the video, should they be removed before the video is played back?

Cloud PVR

Another feature that some streaming services provide is Cloud PVR (Personal Video Recording) that allows a subscriber of the service to record a show or event onto a cloud storage facility provided by the streaming service. It then allows the subscriber to watch it at a later time of their choosing. Typically, such facilities come along with restrictions in the form of the number of shows you can record, storage limits, time limits after which the content might be removed from the account, and so on.

Video QoS & QoE

Finally, in order to bring everything together and ensure that the system is running well, you need to collect data at various points of the eco-system, examine them, and compare them against known standards or user-experience measurements. The outcome of this continuous exercise is then used to tune the entire video delivery pipeline.

Video Quality of Service (QoS) typically refers to the measurement of latency, CDN metrics like cache hit or miss ratios, performance of the authentication systems, etc. This refers to the overall performance of the system.

Video Quality of Experience (QoE) is a term used to describe the quality of the user’s experience and tie that back to the system’s performance. Metrics such as start-up delay, buffering, and video quality fall under this bucket.

QoE & QoS are typically measured using probes or dedicated software installed at various modules of the ecosystem, and the measurements are fed to a central data lake where they are cleaned, joined, and correlated in order to improve the pipeline.
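As a small example of what such software computes, the sketch below derives two common QoE metrics, start-up delay and rebuffering ratio, from a list of player events. The event names and the exact metric definitions are assumptions made for this illustration; analytics vendors define these metrics in slightly different ways.

```python
def qoe_summary(events):
    """Summarize one playback session.

    events: list of (timestamp_s, name) tuples, where name is one of
    "play_requested", "first_frame", "stall_start", "stall_end",
    "session_end" (hypothetical event names).
    """
    times = {}
    stall_total = 0.0
    stall_count = 0
    stall_started = None

    for timestamp, name in sorted(events):
        if name == "stall_start":
            stall_started = timestamp
        elif name == "stall_end":
            if stall_started is not None:
                stall_total += timestamp - stall_started
                stall_count += 1
                stall_started = None
        else:
            times[name] = timestamp

    startup_delay = times["first_frame"] - times["play_requested"]
    session_length = times["session_end"] - times["first_frame"]
    rebuffer_ratio = stall_total / session_length if session_length > 0 else 0.0

    return {
        "startup_delay_s": startup_delay,
        "stall_count": stall_count,
        "stall_time_s": stall_total,
        "rebuffer_ratio": rebuffer_ratio,
    }


if __name__ == "__main__":
    session = [
        (0.0, "play_requested"),
        (1.5, "first_frame"),
        (30.0, "stall_start"),
        (33.0, "stall_end"),
        (61.5, "session_end"),
    ]
    print(qoe_summary(session))
```

Aggregating these per-session summaries by region, device, or CDN is what lets you tie a QoE problem back to a specific part of the pipeline.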

Until now, we’ve seen the various components that make up a typical video streaming pipeline. But, how does one use this knowledge to design a video streaming service? What are the considerations?

Let’s look at this next!

Designing a Resilient Video delivery Pipeline

Now that we know the building blocks of video delivery (transcoding, packaging, storage, CDNs, playout, and DRM), let’s look at some of the considerations for designing the infrastructure.

With too many components available, how do you know which one to pick? How do you scale it? How do you host it? What infrastructure is needed?

Something that stands out and grabs our attention is the need for coordination across the entire pipeline. Everything has to work together, in tight synchronization, to ensure that the video is delivered to the end-user in real time without delays or buffering.

What do we mean by that? Let’s understand by taking a few real-world examples.

Mismatch between the web server configuration and the traffic

Let’s assume that you are streaming a football game and you’ve configured your servers for a live audience of 1,000 viewers and you don’t expect more than that. However, the game turns interesting and all of a sudden, there are 20,000 viewers on your live stream.

Is your infrastructure configured to handle such a spike in traffic? What can go wrong? Well, your web server could crash, you could exceed your CDN commits, or your authentication system could go down if it can’t scale. All of this can be avoided if you do a bit of research ahead of time to understand how your traffic is likely to vary and design your system so that it can scale.
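A quick back-of-the-envelope calculation shows why the spike matters. The sketch below assumes an average delivered bitrate of 3 Mbps per viewer and a 95% CDN cache hit ratio; both numbers are assumptions for illustration only, so plug in your own measurements.

```python
def peak_egress_gbps(viewers, avg_bitrate_mbps):
    """Total bandwidth needed to serve all concurrent viewers."""
    return viewers * avg_bitrate_mbps / 1000


def origin_egress_gbps(viewers, avg_bitrate_mbps, cache_hit_ratio):
    """Share of that bandwidth that still reaches the origin."""
    return peak_egress_gbps(viewers, avg_bitrate_mbps) * (1 - cache_hit_ratio)


if __name__ == "__main__":
    avg_bitrate_mbps = 3.0   # assumed average bitrate per viewer
    cache_hit_ratio = 0.95   # assumed CDN cache hit ratio

    for viewers in (1_000, 20_000):
        total = peak_egress_gbps(viewers, avg_bitrate_mbps)
        origin = origin_egress_gbps(viewers, avg_bitrate_mbps, cache_hit_ratio)
        print(f"{viewers} viewers: {total:.1f} Gbps total, "
              f"{origin:.2f} Gbps at the origin")
```

Under these assumptions, the jump from 1,000 to 20,000 viewers multiplies the required bandwidth by twenty, which is the kind of headroom your CDN commit, origin, and authentication systems need to be sized for.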

Mismatch between the bandwidth available and the ABR profiles

Suppose you are streaming to a country with relatively poor bandwidth conditions, spotty 4G, and predominantly 3G connectivity, and you decide to stream 1080p at 10 Mbps as the highest profile in your bitrate ladder, with most of the ladder above 3 or 4 Mbps. With such a bitrate ladder, your streaming is bound to be plagued by stalls, buffering, and start-up latency issues.

And this is because you did not do the research and choose bitrates that suit the available bandwidth in your target geographies.

For such a location, you would do well to choose most of your bitrates below 3 Mbps to ensure smooth streaming performance. And for this, it’s best that you have data from an analytics solution.
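If you do have bandwidth measurements from an analytics solution, a simple check like the following can tell you how much of your audience can actually sustain each rung of the ladder. The measurements, the ladder, and the 0.8 safety factor below are invented sample values, not real data.

```python
def playable_share(ladder_kbps, measured_kbps, safety=0.8):
    """For each ladder rung, return the fraction of measured sessions
    whose bandwidth (after a safety margin) could sustain it."""
    total = len(measured_kbps)
    return {
        rung: sum(1 for m in measured_kbps if m * safety >= rung) / total
        for rung in ladder_kbps
    }


if __name__ == "__main__":
    # Hypothetical per-session bandwidth measurements in kbps.
    measured = [900, 1100, 1400, 1900, 2300, 2600, 3100, 4200, 6500, 12000]
    # Hypothetical ladder, topping out at 10 Mbps as in the example above.
    ladder = [400, 800, 1500, 3000, 5000, 10000]

    for rung, share in playable_share(ladder, measured).items():
        print(f"{rung:>6} kbps: playable for {share:.0%} of sessions")
```

A rung that almost nobody can sustain costs transcoding and storage without improving anyone's experience, so the ladder is better spent on extra rungs in the range where most of your viewers sit.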

What this example goes to show is that even the best-laid plans can be defeated by the last-mile bandwidth conditions, and it helps to be prepared!

Video Quality Monitoring

Another common problem with poorly configured infrastructure is not monitoring fluctuations in the input to the encoder or the output of the encoder. Why is this important? Well, if your input stream is disrupted or fails for 10 minutes, then the end-user cannot see anything for those 10 minutes, right? After that, if the stream recovers, then everything is good and things carry on as normal.

But if you have Cloud PVR (recording), then that glitch stays in the recording forever! There is no way to avoid that glitch in the VOD version of the live stream.
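A very basic form of such monitoring is to watch the arrival times of segments coming out of the encoder or packager and flag any gap. The sketch below assumes 6-second segments and a 1-second tolerance; the function name and thresholds are hypothetical, and a production monitor would also inspect the video and audio content itself.

```python
def find_gaps(arrival_times_s, expected_interval_s, tolerance_s=1.0):
    """Return (gap_start_s, gap_end_s) pairs where segments stopped arriving.

    arrival_times_s: sorted timestamps (in seconds) at which each
    segment became available.
    """
    gaps = []
    for previous, current in zip(arrival_times_s, arrival_times_s[1:]):
        if current - previous > expected_interval_s + tolerance_s:
            # The next segment was due at previous + expected_interval_s.
            gaps.append((previous + expected_interval_s, current))
    return gaps


if __name__ == "__main__":
    # Segments every 6 s, then roughly 10 minutes of silence, then recovery.
    arrivals = [0, 6, 12, 18, 618, 624, 630]
    for start, end in find_gaps(arrivals, expected_interval_s=6):
        print(f"input gap from {start}s to {end}s ({end - start}s missing)")
```

Raising an alert when the first segment is overdue gives the operations team a chance to switch to a backup input before the gap ends up in a recording.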

To conclude, several aspects contribute to a high Quality of Service. They are all related to each other and should be aligned for the best result. Specialists in this field can help you launch and scale your service rapidly.

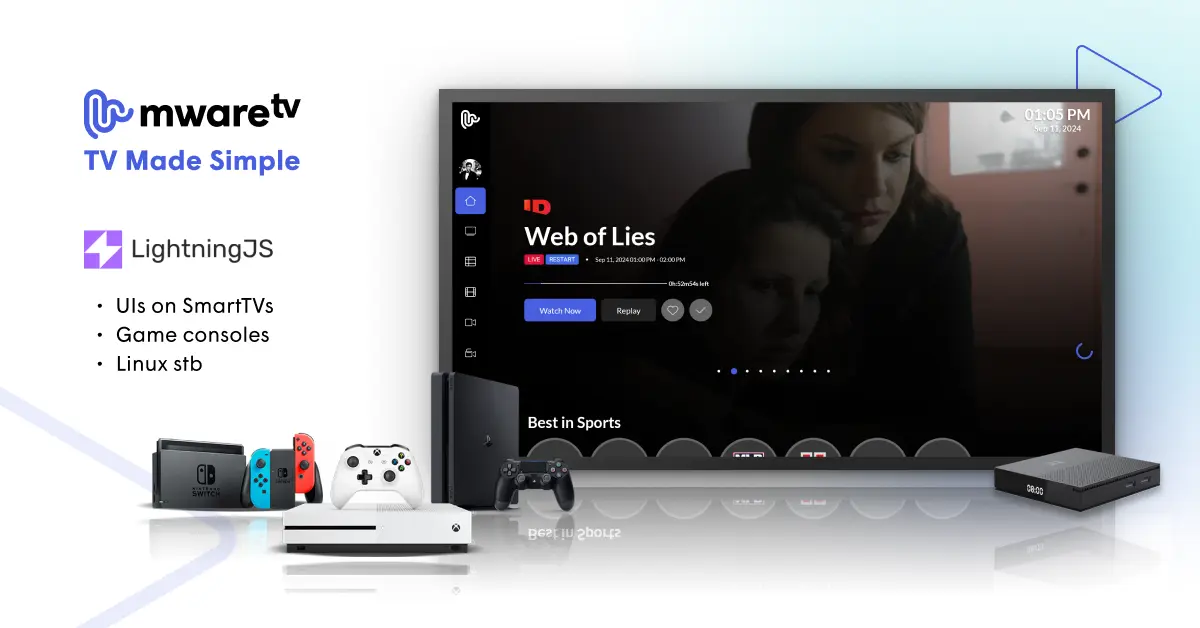

What MwareTV could do for you

MwareTV offers several solutions for content delivery and streaming infrastructures. Our experts help you implement (configure, test & deploy), monitor, and maintain the system setup to assure Quality of Service and timely scaling. Combined with the MwareTV Management System and Apps, this completes your platform for offering TV-based services to your audience.